Automated knowledge quality with Knowledge Agents

Automatically verify and unverify knowledge—so your AI and your teams always work from what’s accurate.

As companies connect more knowledge into AI search, chat tools, and agents, one problem gets amplified fast: outdated and incorrect information. Enterprise search can index everything—but it can’t tell you what’s right.

Knowledge Agents solve this by automating knowledge quality.

They continuously evaluate the knowledge powering your answers—using real usage signals, content context, and policy-driven rules—to automatically verify what’s trustworthy and unverify what’s not.

This is how you keep your AI Source of Truth accurate at scale—without endless manual reviews.

How Knowledge Agents improve knowledge quality

Traditional verification relies on periodic, manual reviews that only touch a small fraction of company knowledge. As content grows and AI usage increases, this approach breaks down quickly.

With Knowledge Agents, verification shifts from a reminder-driven task to a continuous, automated system.

Knowledge Agents evaluate content as often as you need and take action based on how your knowledge is actually used—so accuracy improves over time, without placing more burden on subject matter experts.

They can:

- Verify content that’s actively used, trusted, and up to date

- Unverify content that’s outdated, flagged, or no longer relevant

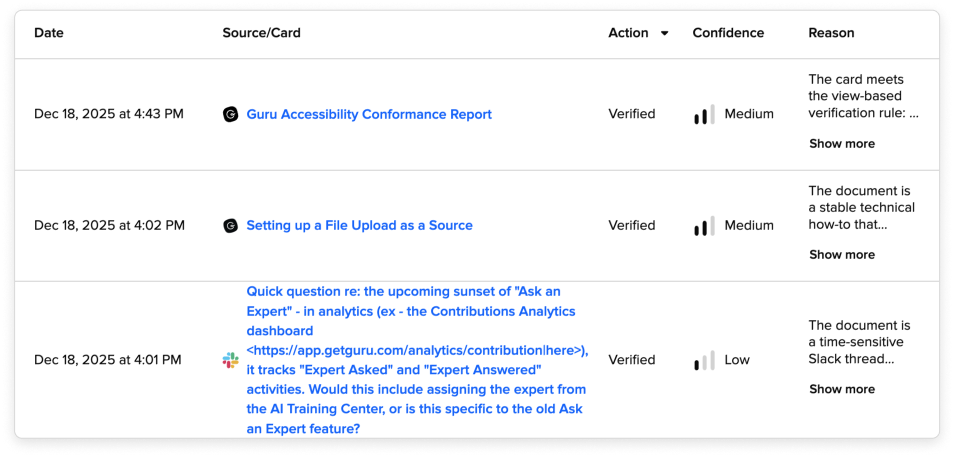

- Explain every decision with clear reasoning and confidence levels

- Log all actions for auditability and human oversight

All of this works within your existing permissions and without requiring subject matter experts (SMEs) to intervene constantly.

How it works

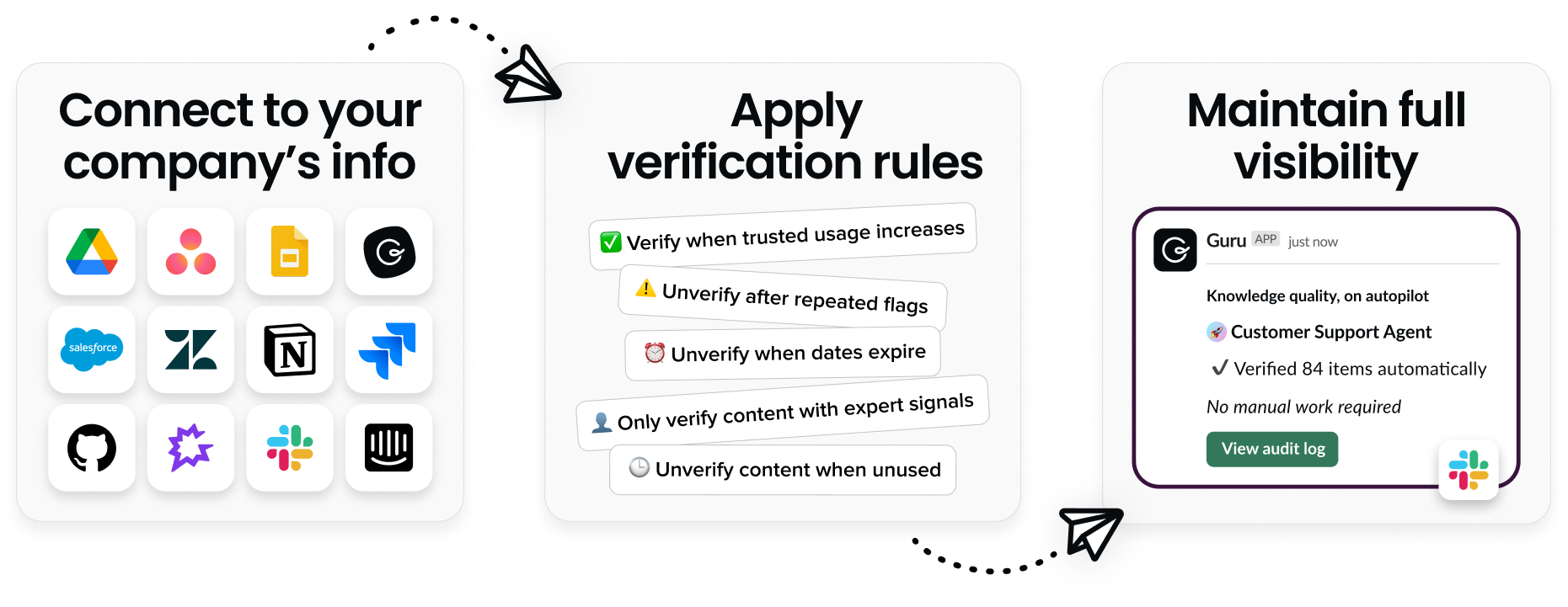

At a high level, automated knowledge quality with Knowledge Agents is built on three pillars:

Connect to your company’s info → Apply verification rules → Maintain full visibility

How Knowledge Agents operate

Once enabled, Knowledge Agents run continuously in the background to keep your knowledge accurate, current, and trustworthy—without manual intervention.

1. Focus on knowledge that matters

Knowledge Agents prioritize the content that’s actively powering answers, rather than attempting to review everything at once. This ensures automation is applied where accuracy has the greatest impact.

2. Evaluate quality using real signals

Knowledge Agents assess content using multiple signals together, including:

- Behavioral signals — usage, views, feedback, and expert interactions

- Content signals — time sensitivity, freshness, and relevance

- Analytical patterns — engagement trends over time

This mirrors how humans judge quality, but operates continuously at enterprise scale.

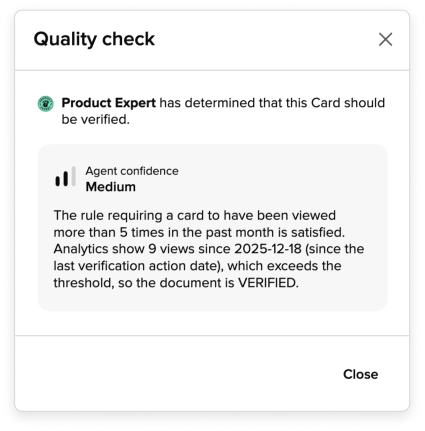

3. Take action with transparency

When content is verified or unverified:

- The action is logged

- The reason is clearly explained

- A confidence level is shown

- Humans can review or override decisions at any time

Automation reduces manual work without bypassing permissions, audit logs, or human review.
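This page doesn't describe how Knowledge Agents weigh these signals internally. The Python sketch below is a hypothetical illustration only, not Guru's implementation or API. The `Signals` fields, the thresholds, the `flag_for_review` outcome, and the `evaluate`, `apply`, and `override` functions are all assumptions made for the example. It shows the general shape of steps 2 and 3: combine several signals, choose an action, attach a reason and a confidence level, and write an audit entry that a human can later override.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Signals:
    # Hypothetical inputs for illustration; not Guru's actual schema.
    views_last_30d: int      # behavioral signal: recent usage
    helpful_votes: int       # behavioral signal: positive feedback
    flags: int               # behavioral signal: open reader flags
    days_since_update: int   # content signal: freshness
    time_sensitive: bool     # content signal: does this go stale quickly?


@dataclass
class Decision:
    action: str        # "verify", "unverify", or "flag_for_review" (hypothetical)
    reason: str        # human-readable explanation of the decision
    confidence: float  # 0.0 to 1.0


def evaluate(s: Signals) -> Decision:
    """Apply made-up policy rules; negative evidence is checked first."""
    stale_after = 90 if s.time_sensitive else 365  # hypothetical freshness windows
    if s.flags > 0:
        return Decision("unverify", f"{s.flags} open flag(s) from readers", 0.9)
    if s.days_since_update > stale_after:
        return Decision(
            "unverify",
            f"not updated in {s.days_since_update} days (limit {stale_after})",
            0.7,
        )
    if s.views_last_30d >= 20 and s.helpful_votes >= 3:
        return Decision("verify", "actively used, rated helpful, and fresh", 0.8)
    return Decision("flag_for_review", "signals are inconclusive; route to an expert", 0.5)


# Every decision is appended here so people can audit it later.
audit_log: list[dict] = []


def apply(content_id: str, s: Signals) -> Decision:
    """Evaluate one piece of content and record the decision."""
    d = evaluate(s)
    audit_log.append({
        "content_id": content_id,
        "action": d.action,
        "reason": d.reason,
        "confidence": d.confidence,
        "at": datetime.now(timezone.utc).isoformat(),
        "overridden_by": None,  # filled in if a human overrides the decision
        "final_action": d.action,
    })
    return d


def override(index: int, reviewer: str, action: str) -> None:
    """Let a human replace an automated decision while keeping the original entry."""
    entry = audit_log[index]
    entry["overridden_by"] = reviewer
    entry["final_action"] = action
```

Under these made-up thresholds, `apply("doc-1", Signals(50, 5, 0, 10, False))` returns a `verify` decision with confidence 0.8. Checking flags and staleness before usage reflects the idea that strong negative evidence should outweigh popularity; a production system could instead use weighted or learned scoring rather than fixed rules.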

What happens to your knowledge

Verified content

- Explicitly marked as trusted

- Used confidently in answers, chat, and research

- Powers AI tools and agents with accurate context

Unverified content

- Can be automatically excluded from answers and search results

- Prevents employees and AI tools from relying on outdated information

- Surfaces exactly what needs attention—without unnecessary noise

Built for humans and AI

Automated knowledge quality doesn’t just protect employees—it protects every AI system connected to Guru.

Because Guru is the AI Source of Truth:

- Verified knowledge improves answers inside Guru

- The same trust extends to Slack, Teams, and browser workflows

- External AI tools and agents connected through MCP (Model Context Protocol) rely on the same verified foundation

Correct something once—and it’s right everywhere.

Why this matters

From manual upkeep to trust on autopilot

Without automation:

- Subject matter experts are overwhelmed by review requests

- Verification backlogs grow

- AI answers quietly drift from reality

With Knowledge Agents:

- Verification happens continuously in the background

- Experts focus only where judgment is truly needed

- AI stays grounded in accurate, current context

This isn’t about removing humans. It’s about removing unnecessary work—while increasing trust.

Key outcomes

- Significantly reduce manual verification work

- Improve AI answer accuracy across every surface

- Prevent outdated knowledge from spreading

- Maintain auditability, permissions, and control

- Scale governance as AI adoption grows

FAQs

Knowledge Agents reduce manual work, but they don’t remove human oversight. Automation proposes verification and flags potential issues using real usage and content signals, while experts and admins retain full visibility and control to review, override, or adjust decisions at any time.

Knowledge Agents evaluate content using a combination of usage signals, content context, and policy-driven rules. Every action includes a clear explanation and confidence level, so teams can see exactly why content was verified or unverified.

Unverified content is clearly identified and can be automatically excluded from answers and search results. This prevents employees and AI tools from relying on outdated or questionable information, while keeping content available for review or updates.

Knowledge Agents evaluate the knowledge that powers your answers—whether it lives in Guru or in connected systems—while always respecting existing permissions and access controls.

Enterprise search indexes content but doesn’t evaluate its accuracy. Knowledge Agents continuously assess knowledge quality, verify what’s trustworthy, and prevent outdated information from spreading—so both employees and AI tools rely on information that’s actively validated.
